Today, we are open-sourcing our solution for detecting secrets in video content. We use it internally to search videos published on our GitLab Unfiltered YouTube channel for secrets such as API keys and other sensitive tokens.

While there are existing tools for secret detection, we did not find a tool that quite fit the bill for our use case, so we decided to implement a custom scanner. In this blog post, we'll walk through our general approach, some of the challenges we encountered, and our solution. We'll also discuss how GitLab’s new AI assistant, GitLab Duo Chat, helped with the implementation of the scanner.

Scanning videos, one frame at a time

Our general approach to secret detection in videos is quite simple: Split the video into frames, run optical character recognition (OCR) over each frame, and match the resulting text against known secret patterns. If a secret is found, a security incident is kicked off to investigate the leak and revoke exposed secrets.

To implement this approach, we first experimented using FFmpeg for splitting the video into frames and feeding the frames to Tesseract, an open-source engine for OCR. This worked quite well and gave us confidence that the general approach was feasible. However, we decided to switch to Google Cloud Platform's Video Intelligence API for the frame splitting and OCR for the simple reason of not having to scale and maintain our own implementation.

FFmpeg and Tesseract are good options if third-party APIs cannot be used or if more control over the process is required. For example, if the secrets are only exposed for a brief moment in the video, using FFmpeg allows you to increase the frame sampling rate to analyze more frames per second and increases the chances of catching the frame that exposes the secret. The Video Intelligence API does not provide a comparable level of control.

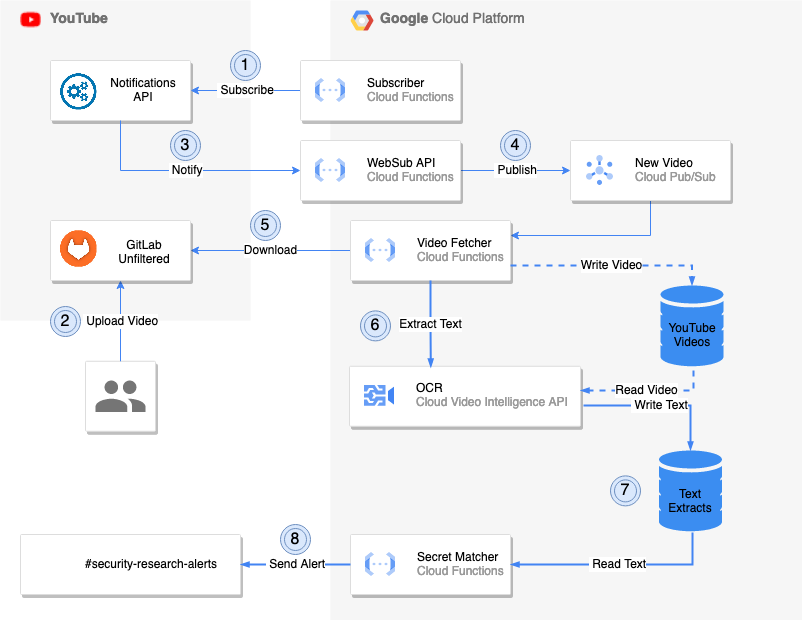

The choice between the Video Intelligence API and FFmpeg + Tesseract also depends on the data set that has to be analyzed. The Video Intelligence API works well on our data set, which makes the additional complexity of a custom implementation based on FFmpeg + Tesseract hard to justify. After settling for the Video Intelligence API, it was a natural choice to host the rest of the scanner on GCP as well. The below diagram gives an overview of the design:

The scanner is implemented as a collection of cloud functions running on GCP. The cloud function WebSub API implements the WebSub spec, which is used by YouTube to deliver notifications. Notifications of new videos are published to a PubSub topic, which the cloud function Video Fetcher is subscribed to. If a message is received, the video is downloaded and submitted for OCR to the Video Intelligence API. The resulting text extract is checked for secrets by the Secret Matcher and alerts are created in case a secret is found.

Accounting for inaccuracies in OCR

The described approach sounds simple enough, but as with most things, the devil is in the details. When comparing the video scanner to other secret scanning methods, a notable difference is how the video scanner determines if a given string literal is a secret. Secret detection tools usually determine if the given text contains a secret by matching the text against a list of regular expressions, each defining the format of a secret. If there is a match, a secret is detected.

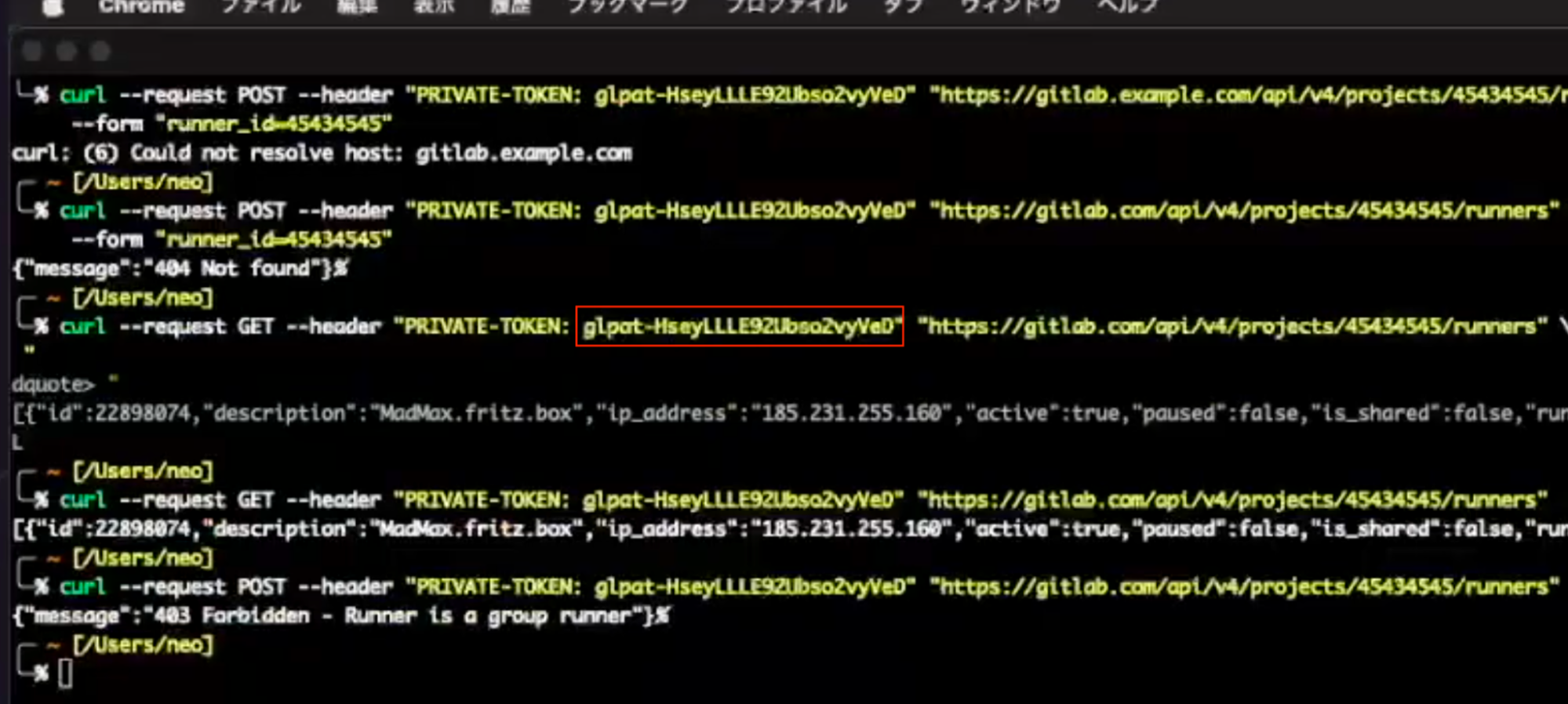

When it comes to video scanning, this approach has limited effectiveness due to the OCR step. In some instances, the recognized text does not quite match the text displayed in the video. For example, the above video frame shows the access token glpat-HseyLLLE92Ubso2vyVeD and OCR extracted the text glpat-HseyLLLE92Ubso2vyVe\. The last character of the secret is D, but OCR extracted a backslash ( \). This error causes the extracted text to no longer match the format of GitLab personal access tokens; therefore, simply matching the text against a regular expression conforming to the token format would have not detected the leaked access token.

To account for the inaccuracies that are introduced by the OCR step, the video scanner uses approximate regular expression matching where a string is not required to match a regular expression exactly, but small deviations in the strings are allowed. These deviations are expressed as string edit distance and define how many characters in the string need to be inserted, deleted, or substituted to make the string match a given regular expression. For example, the string edit distance for the previous example is 1 because the erroneously detected backslash has to be substituted with an alphanumeric character or a minus sign to make the string match the GitLab personal access token format.

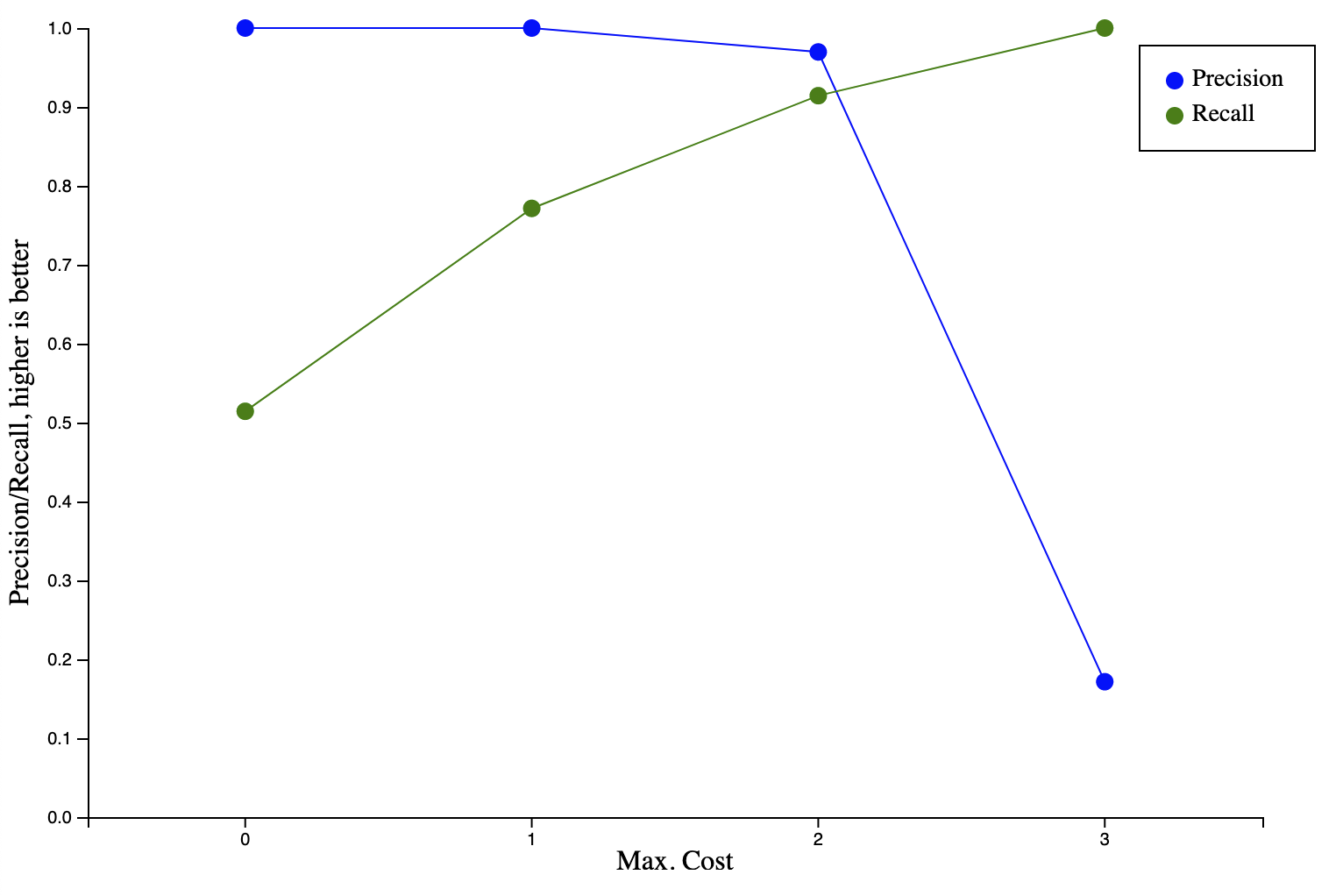

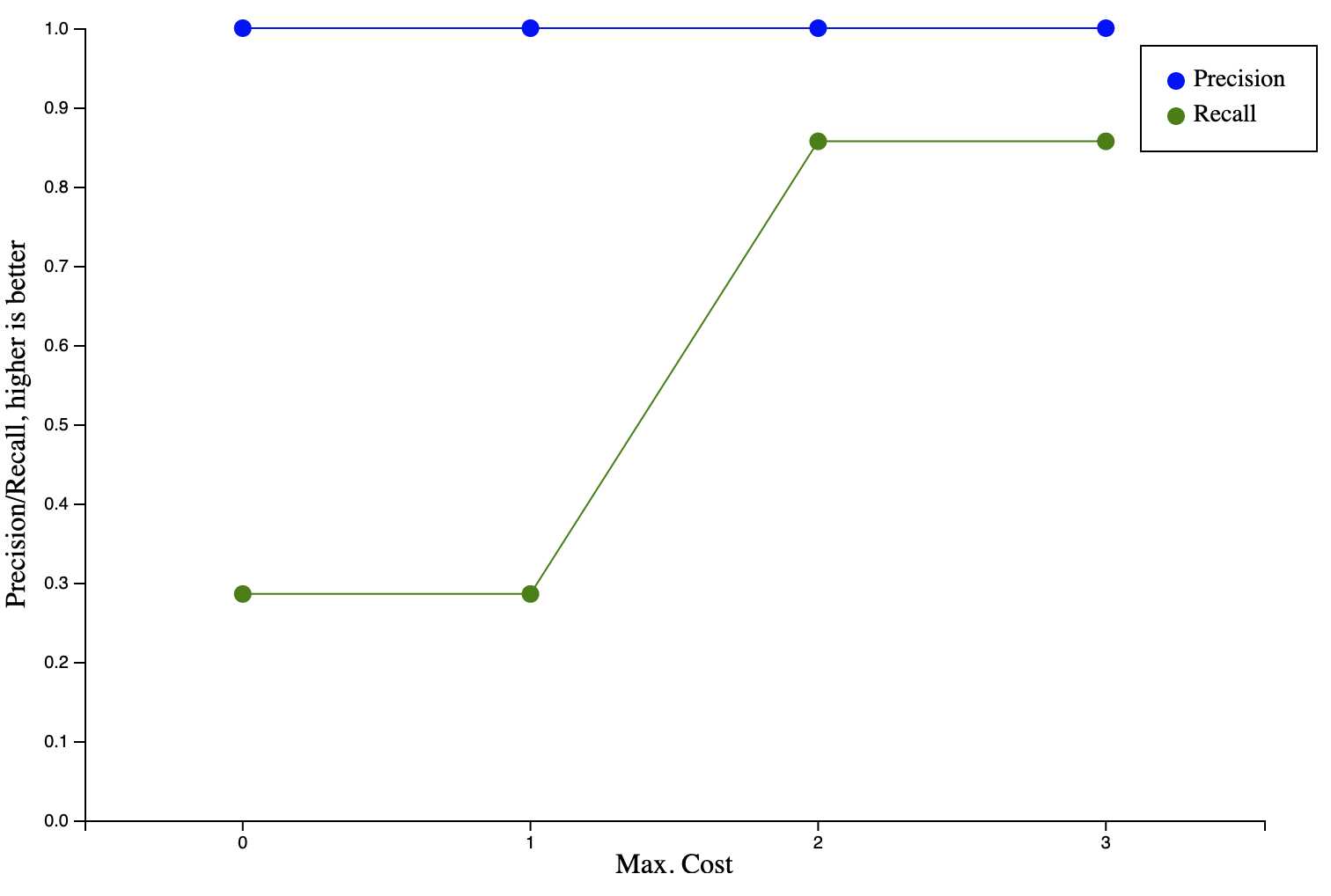

Finding the right value for the maximum edit distance for a string to still be considered to match a regular expression depends on the data set and requires some experimentation. If the value is too low, secrets might be missed and if the value is too high, strings that are not secrets will be matched. To find the right balance for our use case, we collected a data set of videos leaking secrets and ran them through our scanner pipeline. We measured precision (how many matches are not secrets) and recall (how many matches are relevant/true secrets) for different maximum edit distances. We collected this data for the two most relevant types of secrets: GitLab API access tokens and runner registration tokens. The below diagrams show the results.

For both types of secrets, the optimal maximum cost is 2, at which point a high precision and recall are achieved (97% precision and 91% recall for access tokens; 100% precision and 86% recall for runner registration tokens). Decreasing the maximum distance results in worse recall, while increasing the maximum cost leads to either no improvement (runner registration token) or adversely affects precision (access tokens).

Implementation made easy using GitLab Duo Chat

After some initial experimentation, it was clear to us that approximate regular expression matching can increase the detection accuracy of our video scanner. However, the implementation turned out not to be trivial. While the algorithms for approximate regular expression matching have existed since the '90s, there is no widespread library support. TRE is a solid choice but is written in C and thus requires bindings to be called from the scanner’s runtime environment (Node.js).

To reduce the development effort, we turned to GitLab Duo Chat. After discussing our options with Duo Chat for calling C from Node.js, we settled on implementing a C++ add-on. We quickly iterated towards a working implementation, which would have undoubtedly taken considerably more time without the help of GitLab Duo Chat. Below is an excerpt from the chat session.

Me: Are you familiar with the C library TRE? Can you help me write a Node.js addon that is a wrapper for TRE?

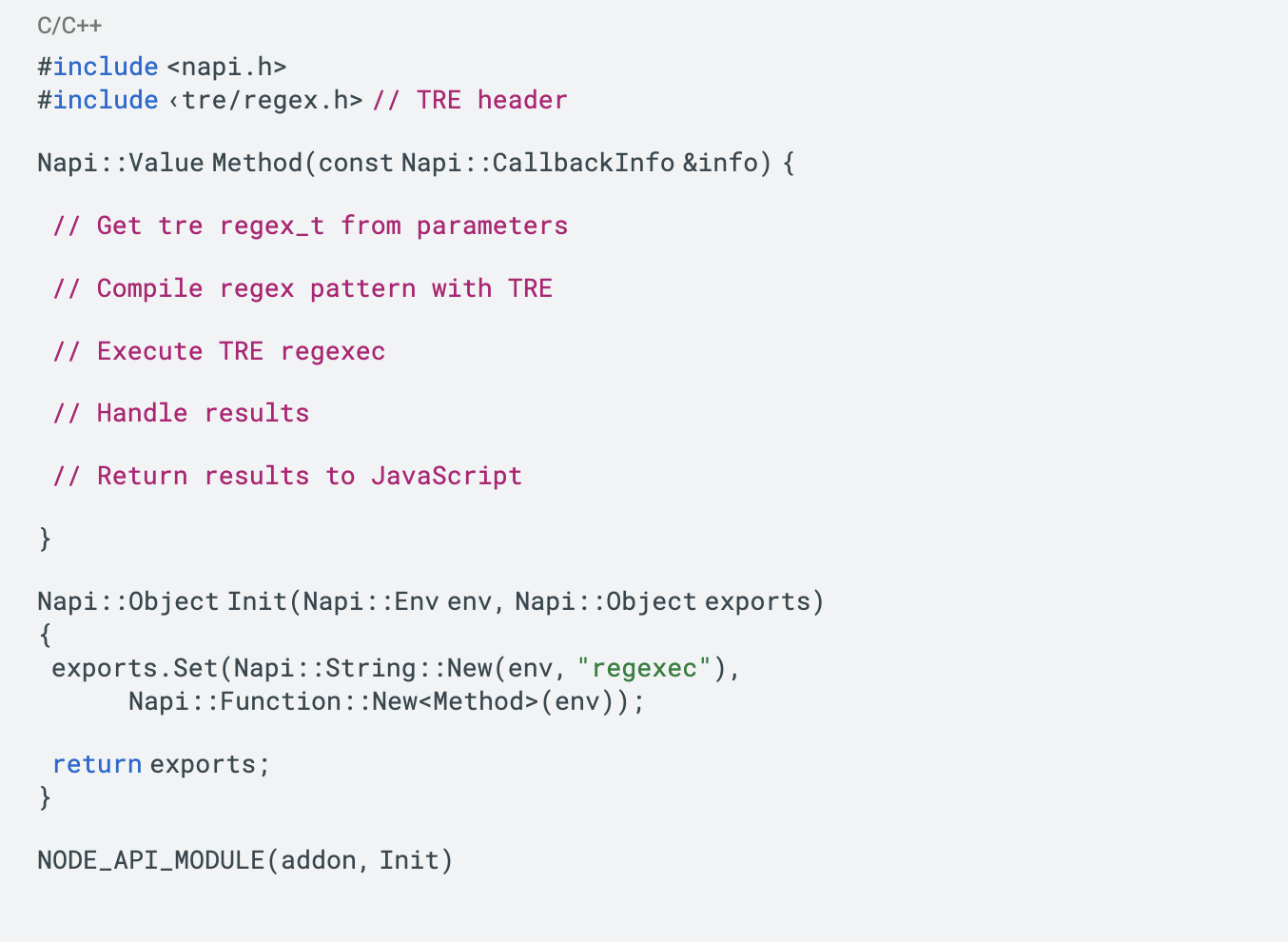

Duo: Here is the template for wrapping the TRE library in a Node.js addon using N-API:

The key steps are:

- Include TRE header

- Get tre regex_t from parameters

- Use tre_regcomp() to compile pattern

- Execute regexec()

- Handle TRE match results

- Return results back to JavaScript

Let me know if this helps explain how to integrate TRE regex usage in a Node.js add-on!

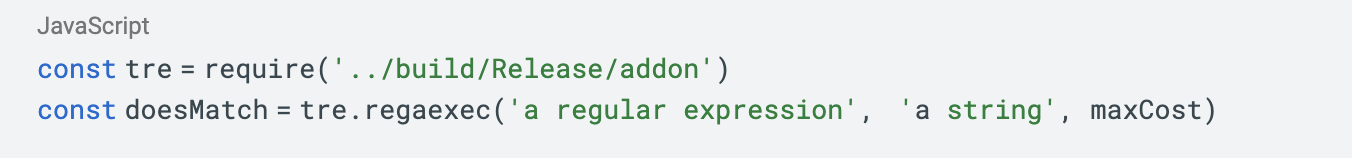

Me: Ok, now let's add code for the tasks that you outlined in the template. I want to call this C++ from JavaScript like this:

In this example, the first parameter is the regular expression that should be used to create the parameter regex_t *preg, the second parameter is the string that should be matched against the regular expression, and the third parameter is an integer that represents TRE’s max_cost.

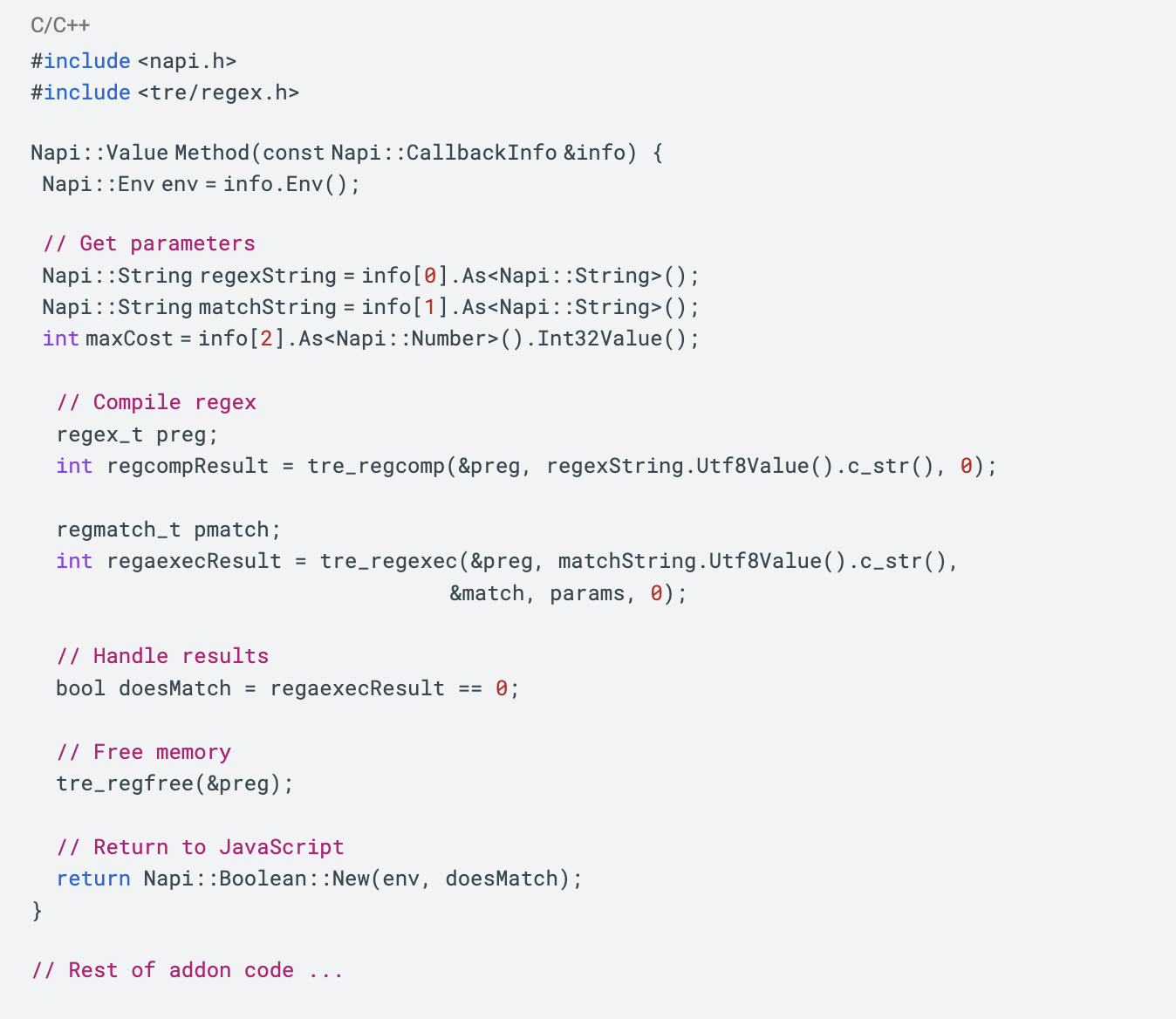

Duo: Here is example C++ code implementing TRE regex usage to match the provided JavaScript code snippet.

Let me know if you need any clarification on this implementation!

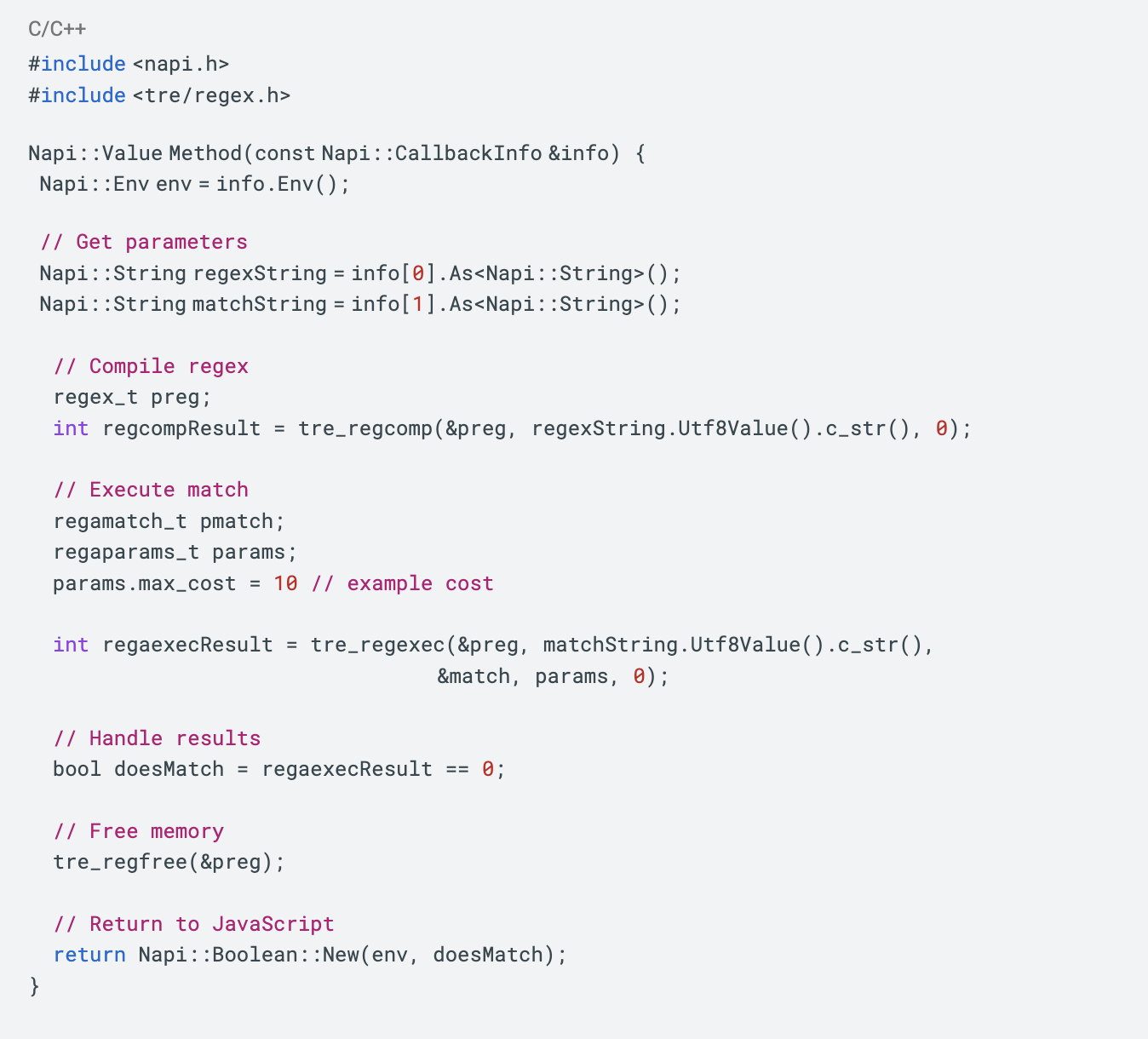

Me: Thank you for the code. Can you change the call tre_regexec to tre_regaexec?

Duo: Here is the code updated to use tre_regaexec instead of tre_regexec:

The key changes are:

- Using

tre_regaexecinstead oftre_regexec- Initializing a

regamatch_tinstead ofregmatch_t- Passing a regaparams_t struct to configure match parameters

Let me know if you need any clarification or have additional questions!

While the code generation capabilities are very impressive and save a lot of time, the generated code needs review and sometimes correction. For example, in our session with Duo Chat it is prompted to call tre_regaexec, but the generated code is calling the similarly named, but distinct function tre_regexec. Typically it is enough to make Duo Chat aware of its mistake and it will correct the code in question. In this respect, working with Duo Chat feels like pair programming rather than fully outsourcing a programming task.

The full add-on code is available here.

Try our open-source implementation

We are making the implementation of the scanner open source under the MIT license. We hope this solution can help you with detecting secrets in your own video content. Please share your feedback in an issue.